TensorFlow vs PyTorch: these are two of the most widely used deep learning frameworks, and each brings distinct capabilities. This article compares them across architecture, ease of use, performance, flexibility, industry adoption, and community support.

Understanding where each framework excels, and where it falls short, makes it easier to choose the right tool for a given machine learning project.

Introduction

TensorFlow and PyTorch are two popular deep learning frameworks widely used in the field of machine learning and artificial intelligence. These frameworks provide tools and libraries that enable developers and researchers to build and train neural network models efficiently.

TensorFlow, developed by Google Brain, offers a comprehensive ecosystem for machine learning tasks, including image recognition, natural language processing, and more. On the other hand, PyTorch, developed by Facebook’s AI Research lab, is known for its dynamic computation graph, making it easier for users to define and modify neural networks on the go.

Popularity and Community Support

- TensorFlow has been around since 2015 and has gained widespread adoption in both academia and industry. Its popularity can be attributed to its strong backing by Google and its extensive documentation and resources.

- PyTorch, first released in 2016, is newer than TensorFlow but has grown rapidly in popularity thanks to its user-friendly interface and flexibility. It has a particularly strong following among researchers and developers.

- Both frameworks have active communities that contribute new features, tutorials, and resources. TensorFlow’s community has historically been larger thanks to its earlier start, while PyTorch’s community has grown quickly, especially in research, and is known for its responsiveness and support.

Architecture and Design

When it comes to the architecture and design of deep learning frameworks like TensorFlow and PyTorch, understanding the underlying structures is crucial for developers and researchers.

TensorFlow Architecture

TensorFlow was originally built around a static computational graph. In TensorFlow 1.x you first define the graph and then execute it inside a session (tf.Session). The graph is a dataflow graph: nodes represent operations and edges represent the tensors flowing between them. Because the whole computation is known before it runs, TensorFlow can optimize it, parallelize it, and distribute it across devices. Since TensorFlow 2.0 (released in 2019), eager execution is the default, so operations run immediately. Wrapping a Python function in tf.function traces it into a graph, which recovers the optimization and deployment benefits of the static approach.
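To make the define-then-run idea concrete, here is a toy sketch in plain Python. The Node, placeholder, add, mul, and run names are hypothetical and are not TensorFlow APIs. The sketch only shows that the graph is built first and evaluated later, possibly many times with different inputs. That is what TensorFlow 1.x’s session-based workflow did, and what tf.function tracing does in TensorFlow 2.x.

```python
# Toy "define-and-run" graph: nodes are declared first, evaluated later.
# Conceptually mirrors building a graph and then executing it; none of these
# classes or functions are TensorFlow APIs.


class Node:
    """A node in the dataflow graph: an operation plus its input edges."""

    def __init__(self, op, inputs=(), name=None):
        self.op = op                 # callable computing this node's value (None for placeholders)
        self.inputs = list(inputs)   # upstream nodes whose values flow in along the edges
        self.name = name


def placeholder(name):
    # Placeholders have no op; their values are supplied at run time.
    return Node(op=None, name=name)


def add(a, b):
    return Node(lambda u, v: u + v, [a, b], name="add")


def mul(a, b):
    return Node(lambda u, v: u * v, [a, b], name="mul")


def run(node, feed, cache=None):
    """Evaluate `node`; `feed` maps placeholder names to concrete values."""
    if cache is None:
        cache = {}
    if id(node) in cache:            # each node is computed at most once per run
        return cache[id(node)]
    if node.op is None:
        value = feed[node.name]
    else:
        value = node.op(*(run(i, feed, cache) for i in node.inputs))
    cache[id(node)] = value
    return value


# Phase 1: define the graph. Nothing is computed yet.
x = placeholder("x")
w = placeholder("w")
b = placeholder("b")
y = add(mul(w, x), b)   # y = w * x + b

# Phase 2: execute the same graph with different inputs.
print(run(y, {"x": 3.0, "w": 2.0, "b": 1.0}))   # 7.0
print(run(y, {"x": 0.5, "w": 4.0, "b": -1.0}))  # 1.0
```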

PyTorch Architecture

PyTorch, by contrast, builds its computational graph dynamically as operations execute, an approach known as define-by-run. Because the graph is simply a record of the Python code that actually ran, models can use ordinary loops, conditionals, and debuggers. This imperative, Pythonic style has made PyTorch especially popular with researchers. PyTorch 2.x also adds optional graph capture and optimization through torch.compile.
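The toy sketch below illustrates define-by-run in plain Python. It uses hypothetical Tape and Value classes rather than anything from PyTorch. Each operation runs immediately and records itself, so the recorded graph changes with the input: ordinary Python control flow decides which operations execute.

```python
# Toy "define-by-run" tracing: each operation executes immediately AND records
# itself on a tape, so the recorded graph is whatever code actually ran.
# Conceptual illustration only; these classes are not PyTorch APIs.


class Tape:
    def __init__(self):
        self.ops = []  # names of recorded operations, in execution order


class Value:
    def __init__(self, data, tape):
        self.data = data
        self.tape = tape

    def __add__(self, other):
        self.tape.ops.append("add")
        return Value(self.data + other.data, self.tape)

    def __mul__(self, other):
        self.tape.ops.append("mul")
        return Value(self.data * other.data, self.tape)


def model(x, tape):
    # Plain Python control flow: how many ops get recorded depends on the data.
    h = Value(x, tape)
    while h.data < 10:
        h = h * Value(2, tape)
    return h + Value(1, tape)


tape_a = Tape()
print(model(3, tape_a).data, tape_a.ops)   # 13 ['mul', 'mul', 'add']
tape_b = Tape()
print(model(20, tape_b).data, tape_b.ops)  # 21 ['add']
```

The two calls run the same model function but record different graphs. A static graph would have to encode the loop explicitly ahead of time.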

Key Design Differences

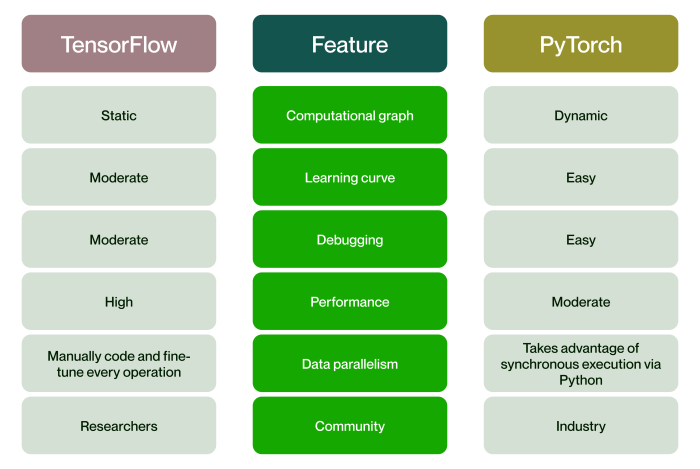

One of the key design differences between TensorFlow and PyTorch has been how they define and execute computational graphs. Static graphs, TensorFlow’s original model, lend themselves to whole-program optimization and large-scale production deployment. PyTorch’s dynamic graphs make experimentation and research more flexible. TensorFlow 1.x’s static approach also gave it a reputation for a steeper learning curve. TensorFlow 2.x narrowed that gap by making eager execution the default, and PyTorch 2.x added optional graph compilation, so the two frameworks have converged considerably. Overall, the choice between TensorFlow and PyTorch often comes down to the specific requirements of the project and the preferences of the user.

Ease of Use: TensorFlow vs PyTorch

When it comes to ease of use, the installation and setup process can play a crucial role in determining how quickly users can start working with a framework. Let’s compare the installation and learning curve of TensorFlow and PyTorch.

Installation and Setup of TensorFlow

Installing TensorFlow is usually straightforward: a single pip command (pip install tensorflow) installs it into a Python environment. TensorFlow’s documentation also walks users through platform-specific and GPU setup.

Installation and Setup of PyTorch

PyTorch can also be installed with pip (pip install torch). The official website provides an installation selector that generates the right command for your operating system, package manager, and compute platform (CPU or a specific CUDA version). Some users find choosing the right build slightly more involved than TensorFlow’s single command. As with TensorFlow’s GPU builds, compatibility problems usually come from mismatched GPU drivers or CUDA versions.
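After installing either framework (the PyPI package names are tensorflow and torch), a quick standard-library check shows what the current Python environment can see. This is a minimal sketch that assumes those default package names. Alternative distributions, such as platform-specific TensorFlow builds, may show up with version "unknown".

```python
# Report which frameworks are importable in the current environment, using only
# the standard library (so it runs even if neither framework is installed).
import importlib.metadata
import importlib.util


def framework_versions(packages=("tensorflow", "torch")):
    """Map each import name to its installed version, or None if not importable."""
    versions = {}
    for name in packages:
        if importlib.util.find_spec(name) is None:
            versions[name] = None
            continue
        try:
            versions[name] = importlib.metadata.version(name)
        except importlib.metadata.PackageNotFoundError:
            # Importable, but no distribution metadata under this exact name
            # (e.g. a differently named TensorFlow build).
            versions[name] = "unknown"
    return versions


if __name__ == "__main__":
    for name, version in framework_versions().items():
        print(f"{name}: {version or 'not installed'}")
```

Once a framework imports, you can confirm GPU visibility with torch.cuda.is_available() in PyTorch or tf.config.list_physical_devices("GPU") in TensorFlow.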

Learning Curve

In terms of learning curve, each framework has its own strengths. TensorFlow’s high-level Keras API lets beginners build and train standard models in a few lines. PyTorch usually asks users to write more of the training loop themselves, which gives more control and makes code easier to follow and debug. Many users find its plain-Python style easy to pick up, though the extra code can feel like a hurdle for those new to deep learning.

Overall, both TensorFlow and PyTorch provide comprehensive documentation and resources to help users navigate the installation process and learn how to use the frameworks effectively. The choice between the two will ultimately depend on the user’s familiarity with deep learning concepts and their specific preferences in terms of ease of use and flexibility.

Performance and Scalability

When it comes to deep learning frameworks, performance and scalability are crucial factors to consider for handling large datasets and complex models efficiently.

Performance Benchmarks of TensorFlow

TensorFlow is known for its high performance capabilities, especially in tasks like image recognition and natural language processing. It provides strong support for distributed computing, allowing users to train models across multiple GPUs and even on large clusters of machines. TensorFlow’s eager execution mode also contributes to faster iteration and debugging of models.

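- TensorFlow performs well in standard workloads, but leaderboards such as the ImageNet Large Scale Visual Recognition Challenge rank models rather than frameworks. Framework-level comparisons such as the MLPerf Training benchmarks include submissions built on both TensorFlow and PyTorch, and results depend heavily on hardware and implementation details.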

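- It can improve performance through graph-level optimization. For example, the Grappler graph optimizer and the XLA compiler simplify and fuse operations in functions traced with tf.function. Automatic differentiation, which both frameworks provide, computes the gradients needed for training rather than speeding training up.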

- TensorFlow’s integration with Google’s TPUs (Tensor Processing Units) further boosts performance for training large models. PyTorch can also run on TPUs through the PyTorch/XLA project.
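Automatic differentiation is easiest to understand at toy scale. The sketch below implements reverse-mode differentiation on scalars in plain Python, using a hypothetical Var class. The underlying idea is what tf.GradientTape and torch.autograd do on tensors: record each operation with its local derivative, then apply the chain rule backwards.

```python
# Minimal reverse-mode automatic differentiation on scalars.
# The Var class is a hypothetical teaching aid, not part of either library.
import math


class Var:
    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs of (parent Var, local derivative d(self)/d(parent))
        self.grad = 0.0

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value, ((self, other.value), (other, self.value)))

    def sin(self):
        return Var(math.sin(self.value), ((self, math.cos(self.value)),))

    def backward(self):
        # Topological order: every node appears after all of its parents.
        order, seen = [], set()

        def visit(v):
            if id(v) not in seen:
                seen.add(id(v))
                for parent, _ in v.parents:
                    visit(parent)
                order.append(v)

        visit(self)
        self.grad = 1.0
        # Walk from the output back to the inputs, accumulating via the chain rule.
        for v in reversed(order):
            for parent, local in v.parents:
                parent.grad += v.grad * local


x = Var(2.0)
y = Var(3.0)
z = x * y + x.sin()   # z = x*y + sin(x)
z.backward()
print(x.grad)  # dz/dx = y + cos(x) = 3 + cos(2) ≈ 2.5839
print(y.grad)  # dz/dy = x = 2.0
```

Note that x feeds into two operations, and its gradient correctly accumulates contributions from both paths.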

Scalability Features of TensorFlow vs PyTorch

When it comes to scalability, TensorFlow offers mature features for handling large datasets and models. Its tf.distribute.Strategy API distributes training with relatively small code changes. For example, MirroredStrategy covers multiple GPUs on one machine and MultiWorkerMirroredStrategy covers clusters. TensorFlow Serving handles deploying trained models at scale in production environments.

- TensorFlow’s data parallelism and model parallelism techniques enable efficient training of models on distributed systems, making it suitable for handling large-scale projects.

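- PyTorch offers comparable tools in its core library: torch.distributed, DistributedDataParallel (DDP) for data parallelism, and FullyShardedDataParallel (FSDP) for sharding very large models across GPUs. Third-party libraries such as PyTorch Lightning, a lightweight wrapper around PyTorch, build on these APIs to simplify multi-GPU and multi-node training.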

- TensorFlow’s long-standing focus on scalability and production deployment remains a strength. However, PyTorch is now also widely used for large-scale training, so neither framework has a clear-cut edge for every workload.
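Both tf.distribute.MirroredStrategy and PyTorch’s DistributedDataParallel implement synchronous data parallelism. The toy below simulates that pattern in plain Python, with hypothetical helper names:

- Each simulated worker computes a gradient on its own shard of the data.
- The gradients are averaged, as an all-reduce would do.
- Every replica applies the same update.

Real implementations run the workers on separate devices and exchange gradients over fast interconnects.

```python
# Toy synchronous data parallelism for a 1-parameter linear model y = w * x.
# "Workers" are plain function calls here, not real devices; the averaging step
# stands in for the all-reduce that distributed training frameworks perform.


def shard(data, num_workers):
    """Split data into num_workers interleaved shards."""
    return [data[i::num_workers] for i in range(num_workers)]


def local_gradient(w, batch):
    """Gradient of the mean squared error (w*x - y)^2 over one shard, w.r.t. w."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def all_reduce_mean(values):
    """Average the per-worker gradients (the role of an all-reduce)."""
    return sum(values) / len(values)


def train(data, num_workers=4, lr=0.01, steps=200):
    w = 0.0  # every replica starts from the same weight
    shards = shard(data, num_workers)
    for _ in range(steps):
        grads = [local_gradient(w, s) for s in shards]  # would run in parallel
        w -= lr * all_reduce_mean(grads)                 # identical update on every replica
    return w


data = [(float(x), 3.0 * x) for x in range(1, 9)]  # true weight is 3.0
print(round(train(data), 4))  # 3.0
```

Because the shards here are the same size, the averaged gradient equals the full-batch gradient, so the result matches single-device training.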

Flexibility and Extensibility

When it comes to building custom models and conducting research in machine learning frameworks like TensorFlow and PyTorch, flexibility and extensibility play a crucial role. Let’s explore how these two platforms differ in terms of accommodating unique requirements and integrating new modules and libraries.

Flexibility of TensorFlow for Custom Model Implementations

TensorFlow offers a high level of flexibility for implementing custom models thanks to its extensive APIs and wide range of pre-built layers and operations. Developers can build complex neural network architectures and experiment with various model configurations. TensorFlow’s eager execution mode also runs operations immediately, so models can be debugged and iterated on with ordinary Python tools.

- TensorFlow’s Keras API provides a user-friendly interface for building custom layers and models.

- Users can leverage TensorFlow’s low-level operations to define custom loss functions, optimizers, and metrics.

- The TensorFlow Serving library enables seamless deployment of custom models in production environments.
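A custom loss in either framework is ultimately a function of targets and predictions written with tensor operations. As a framework-agnostic sketch, here is the Huber loss and its gradient in plain Python. Both libraries already ship a built-in version (tf.keras.losses.Huber and torch.nn.HuberLoss), so this only illustrates the math a custom loss would encode.

```python
# Framework-agnostic sketch of a custom loss: the Huber loss, quadratic for
# small errors and linear for large ones (less sensitive to outliers than MSE).
# In TensorFlow or PyTorch the same expressions would use tensor operations.


def huber(y_true, y_pred, delta=1.0):
    """Mean Huber loss over paired lists of targets and predictions."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        err = abs(t - p)
        if err <= delta:
            total += 0.5 * err ** 2
        else:
            total += delta * (err - 0.5 * delta)
    return total / len(y_true)


def huber_grad(y_true, y_pred, delta=1.0):
    """Gradient of the mean Huber loss with respect to each prediction."""
    n = len(y_true)
    grads = []
    for t, p in zip(y_true, y_pred):
        diff = p - t
        clipped = max(-delta, min(delta, diff))  # derivative is clip(diff, -delta, delta)
        grads.append(clipped / n)
    return grads


print(huber([0.0, 0.0], [0.5, 3.0]))       # (0.125 + 2.5) / 2 = 1.3125
print(huber_grad([0.0, 0.0], [0.5, 3.0]))  # [0.25, 0.5]
```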

Extensibility Features of PyTorch for Research and Experimentation

PyTorch is known for its strong extensibility features, making it a popular choice among researchers and practitioners in the machine learning community. The framework allows for easy customization of neural network components and provides a dynamic computation graph that facilitates experimentation.

- PyTorch’s torch.nn.Module class simplifies the creation of custom neural network architectures.

- Users can easily define custom autograd functions to implement complex operations not natively supported by PyTorch.

- The ability to seamlessly integrate with popular libraries like NumPy and SciPy enhances PyTorch’s extensibility.
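In PyTorch, a custom autograd function subclasses torch.autograd.Function and implements static forward(ctx, ...) and backward(ctx, grad_output) methods. You then call it through .apply(). The plain-Python mimic below follows that contract with hypothetical Context and apply helpers; PyTorch itself stores saved values in ctx.saved_tensors. It shows two functions:

- RoundSTE rounds in the forward pass but passes gradients straight through, a common trick for non-differentiable operations.
- Exp saves its output for reuse in the backward pass.

```python
# Pure-Python mimic of the forward/backward contract behind torch.autograd.Function.
# Context and apply() are hypothetical stand-ins, not PyTorch classes.
import math


class Context:
    def __init__(self):
        self.saved = ()

    def save_for_backward(self, *values):
        self.saved = values


class RoundSTE:
    """Round in forward; pass the gradient straight through in backward.

    Rounding has zero gradient almost everywhere, so a custom backward (the
    straight-through estimator) keeps training signals flowing.
    """

    @staticmethod
    def forward(ctx, x):
        return [round(v) for v in x]

    @staticmethod
    def backward(ctx, grad_output):
        return list(grad_output)  # identity gradient


class Exp:
    """exp(x), saving the output because d/dx exp(x) = exp(x)."""

    @staticmethod
    def forward(ctx, x):
        out = [math.exp(v) for v in x]
        ctx.save_for_backward(out)
        return out

    @staticmethod
    def backward(ctx, grad_output):
        (out,) = ctx.saved
        return [g * o for g, o in zip(grad_output, out)]


def apply(fn, x, grad_output):
    """Run fn's forward pass, then its backward pass with a given upstream gradient."""
    ctx = Context()
    y = fn.forward(ctx, x)
    return y, fn.backward(ctx, grad_output)


print(apply(RoundSTE, [0.4, 1.6], [1.0, 1.0]))  # ([0, 2], [1.0, 1.0])
print(apply(Exp, [0.0], [2.0]))                 # ([1.0], [2.0])
```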

Ease of Integrating New Modules and Libraries

Both TensorFlow and PyTorch support the integration of new modules and libraries, allowing developers to leverage existing tools and resources to enhance their machine learning workflows. While TensorFlow has a larger ecosystem of pre-built components and extensions, PyTorch’s flexible architecture enables seamless integration with external libraries.

- TensorFlow’s TensorFlow Hub provides a repository of reusable machine learning modules that can be easily integrated into TensorFlow workflows.

- PyTorch’s TorchServe facilitates the deployment of PyTorch models as web services, enabling efficient integration with external applications.

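- Each framework also has its own extension libraries, such as TensorFlow Addons for TensorFlow and PyTorch Lightning for PyTorch; these are not cross-compatible. To move models between the two frameworks, the ONNX format is a common route, supported by exporters such as torch.onnx and tf2onnx.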

Industry Applications

When it comes to industry applications, both TensorFlow and PyTorch have made significant contributions in various sectors. Let’s explore some examples of industries where these frameworks are predominantly used and compare their applications.

TensorFlow Applications

- Healthcare: TensorFlow is widely used in healthcare for tasks such as medical imaging analysis, disease detection, and drug discovery.

- Finance: In the finance industry, TensorFlow is utilized for fraud detection, risk assessment, algorithmic trading, and personalized banking services.

- Automotive: TensorFlow is employed for autonomous driving systems, vehicle diagnostics, predictive maintenance, and traffic prediction.

PyTorch Applications

- Research: PyTorch is popular among researchers for its flexibility and ease of use, making it a preferred choice for academic research projects in various fields.

- Natural Language Processing (NLP): PyTorch is extensively used in NLP tasks such as language translation, sentiment analysis, text generation, and speech recognition.

- Computer Vision: PyTorch is commonly used in computer vision applications like object detection, image segmentation, facial recognition, and video analysis.

Reasons Behind the Choice of Framework

The choice of framework usually depends on the requirements of the industry, the nature of the tasks, the expertise of the team, and the level of community support. TensorFlow’s strong presence in industries like healthcare and finance is often attributed to its mature deployment tooling, scalability, and large collection of pre-trained models. PyTorch’s popularity in research and NLP stems largely from its dynamic computation graph and easy debugging. Each framework offers advantages that suit the needs of different sectors.

Community Support and Documentation

Community support and documentation play a crucial role in the success and adoption of machine learning frameworks like TensorFlow and PyTorch. Let’s analyze the availability and quality of support in both frameworks and discuss the importance of community contributions.

TensorFlow Community Support

TensorFlow, being one of the most popular deep learning frameworks, boasts a large and active community. Users can seek help from various forums, such as Stack Overflow, GitHub issues, and dedicated TensorFlow community forums. The TensorFlow team also provides extensive documentation, tutorials, and resources to aid users in learning and utilizing the framework effectively.

- The TensorFlow community is known for its responsiveness and willingness to help beginners and experienced users alike.

- Regular updates and contributions from the community ensure that TensorFlow remains relevant and up-to-date.

- The availability of pre-trained models, code snippets, and libraries shared by the community further enriches the TensorFlow ecosystem.

PyTorch Community Support

PyTorch has gained popularity for its user-friendly interface and dynamic computation graph. Its community is also vibrant, offering support through the official PyTorch Forums (discuss.pytorch.org), GitHub issues, and social media. The PyTorch team provides detailed documentation, tutorials, and examples to help users understand and apply the framework effectively.

- The PyTorch community is known for its innovative contributions and active engagement with users through workshops, meetups, and online events.

- Community-driven projects and extensions enhance the functionality and usability of PyTorch, catering to diverse use cases and applications.

- Collaboration with academic and industry experts ensures that PyTorch remains at the forefront of research and development in the deep learning community.

Community support and documentation are essential for users to overcome challenges, learn best practices, and stay updated with the latest advancements in machine learning frameworks.

In conclusion, the competition between TensorFlow and PyTorch continues to drive innovation in machine learning, and each framework has adopted strengths of the other. For most projects, the better choice depends on deployment needs, team experience, and the ecosystem around the task at hand.
